FeaCo: Reaching Robust Feature-Level Consensus in Noisy Pose Conditions

Published: 2023, Proceedings of the 31st ACM International Conference on Multimedia

Recommended citation:

Gu J, Zhang J, Zhang M, et al. FeaCo: Reaching Robust Feature-Level Consensus in Noisy Pose Conditions[C]//Proceedings of the 31st ACM International Conference on Multimedia. 2023: 3628-3636.

https://dl.acm.org/doi/pdf/10.1145/3581783.3611880

Abstract

Collaborative perception offers a promising solution to challenges such as occlusion and long-range data processing. However, limited sensor accuracy produces noisy poses that misalign the observations shared among vehicles. To address this problem, we propose FeaCo, which achieves robust Feature-level Consensus among collaborating agents under noisy pose conditions without additional training. We design an efficient Pose-error Rectification Module (PRM) to align the feature maps derived from different vehicles, reducing both the adverse effect of noisy poses and the bandwidth requirements. We also provide an effective multi-scale Cross-level Attention Module (CAM) to enhance information aggregation and interaction across scales. Experiments on the simulated collaborative perception dataset OPV2V and the real-world dataset V2V4Real show that FeaCo outperforms all other localization rectification methods, reducing heading error and improving localization accuracy across various error levels.
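The CAM is described here only at a high level. As a rough illustration of attention-based fusion across agents and scales, the Python sketch below fuses per-agent feature vectors at one BEV location with scaled dot-product attention (the ego feature acts as the query), then lets the finest-scale result attend over the fused results of all scales. It is not the paper's CAM: the function names, the use of plain dot-product attention without learned projections, and the assumption that every scale has already been resampled to a common grid and channel width are all hypothetical choices.

```python
import math
from typing import List

Vector = List[float]


def softmax(scores: List[float]) -> List[float]:
    # Subtract the max before exponentiating for numerical stability.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(query: Vector, keys: List[Vector], values: List[Vector]) -> Vector:
    """Scaled dot-product attention for a single query (no learned projections)."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    dim = len(values[0])
    return [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]


def fuse_agents(agent_feats: List[Vector]) -> Vector:
    """Fuse one BEV location across agents; index 0 is assumed to be the ego AV."""
    return attend(agent_feats[0], agent_feats, agent_feats)


def fuse_scales(per_scale: List[List[Vector]]) -> Vector:
    """Hypothetical cross-scale step: fuse agents per scale, then let the
    finest scale (index 0) attend over the fused vectors of every scale.
    Assumes all scales were already resampled to the same grid and width."""
    fused = [fuse_agents(agent_feats) for agent_feats in per_scale]
    return attend(fused[0], fused, fused)


# Usage: two scales, each holding an ego feature and one CAV feature.
scales = [
    [[1.0, 0.0], [0.8, 0.2]],  # finest scale: ego, CAV
    [[0.5, 0.5], [0.4, 0.6]],  # coarser scale
]
print(fuse_scales(scales))
```

Because every output is a softmax-weighted average, the fused vector always stays within the range spanned by the input features; a learned module would add projections and nonlinearities on top of this.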

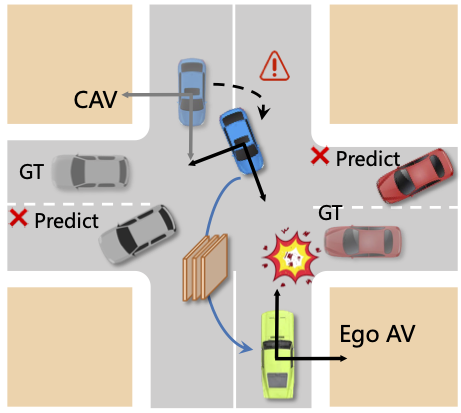

Problem Illustration

The green vehicle and the blue vehicle represent the ego autonomous vehicle (AV) and a connected autonomous vehicle (CAV), respectively. If the pose of the CAV is recorded incorrectly due to noise, the predicted position of the observed red vehicle will be biased, and this inaccurate prediction can cause a collision between the ego AV and the observed vehicle.
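To make the effect concrete, the short Python sketch below projects a point observed by the CAV into the ego frame using a true and a noisy CAV pose and measures the resulting displacement. It assumes a 2D BEV setting in which a pose is `(x, y, yaw)` of the CAV in the ego frame with yaw in radians; the helper names and all numbers are hypothetical.

```python
import math
from typing import Tuple

Point = Tuple[float, float]
Pose = Tuple[float, float, float]  # (x, y, yaw) of the CAV in the ego frame; yaw in radians


def cav_to_ego(point: Point, cav_pose: Pose) -> Point:
    """Rotate by the CAV's yaw, then translate by its position (2D rigid transform)."""
    x, y, yaw = cav_pose
    px, py = point
    c, s = math.cos(yaw), math.sin(yaw)
    return (c * px - s * py + x, s * px + c * py + y)


def projection_error(point: Point, true_pose: Pose, noisy_pose: Pose) -> float:
    """Distance between where the point really is and where the noisy pose puts it."""
    tx, ty = cav_to_ego(point, true_pose)
    nx, ny = cav_to_ego(point, noisy_pose)
    return math.hypot(nx - tx, ny - ty)


# Hypothetical scene: the red vehicle is 40 m ahead of the CAV.
red_in_cav = (40.0, 0.0)
true_pose = (20.0, 5.0, 0.0)
noisy_heading = (20.0, 5.0, math.radians(1.0))  # 1 degree heading error only
noisy_position = (21.0, 5.0, 0.0)               # 1 m localization error only

print(projection_error(red_in_cav, true_pose, noisy_heading))   # about 0.70 m
print(projection_error(red_in_cav, true_pose, noisy_position))  # 1.0 m
```

A pure heading error of Δθ displaces an object at distance r by 2r·sin(Δθ/2), which grows linearly with r, so even a one-degree error shifts distant vehicles noticeably.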

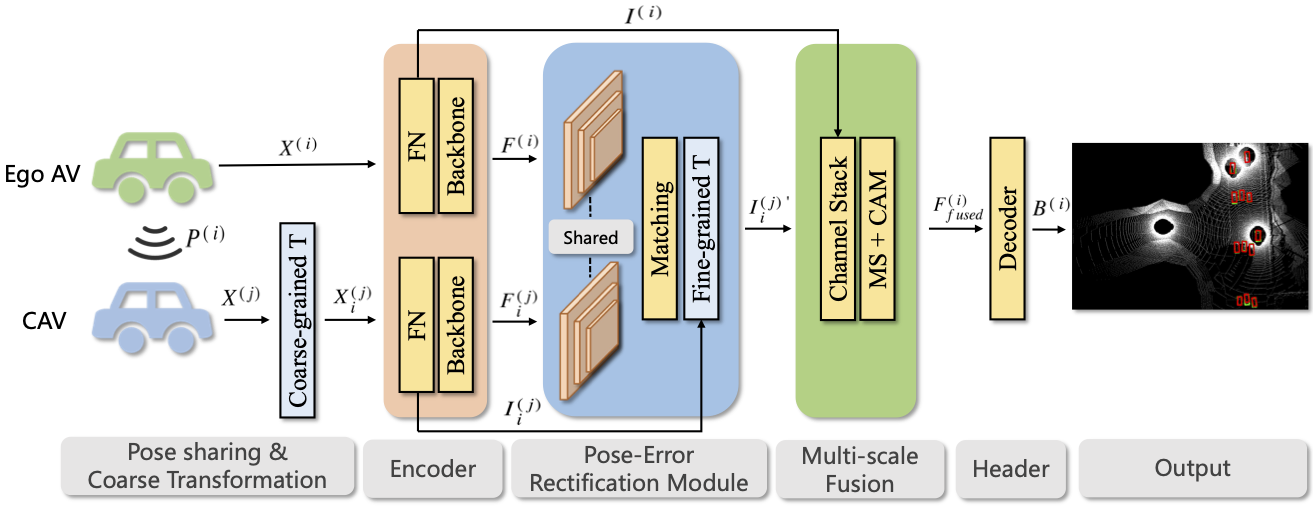

FeaCo: Pose-robust Feature-level Collaborative Framework

After a coarse-grained transformation, each vehicle's encoder extracts features that are still misaligned; these are sent to the Pose-error Rectification Module (PRM), which generates a fine-grained transformation matrix for each CAV. A second transformation then yields aligned features, which are aggregated through multi-scale fusion with the Cross-level Attention Module (CAM). Finally, the ego AV's detection head produces predictions that remain precise and robust under noisy pose conditions.
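The coarse-then-fine alignment can be illustrated with homogeneous 2D transforms. The PRM's internals are not described on this page, so the Python sketch below substitutes a standard technique: given matched keypoint pairs between the coarsely transformed CAV features and the ego features (matching is assumed to be done already), it fits a least-squares rigid transform (the closed-form 2D Kabsch/Procrustes solution) and composes it with the coarse transform built from the noisy pose. All function names, keypoints, and poses are hypothetical.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]
Mat3 = List[List[float]]


def se2(x: float, y: float, yaw: float) -> Mat3:
    """Homogeneous 2D rigid transform: rotation by yaw (radians), then translation."""
    c, s = math.cos(yaw), math.sin(yaw)
    return [[c, -s, x], [s, c, y], [0.0, 0.0, 1.0]]


def matmul(a: Mat3, b: Mat3) -> Mat3:
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)] for i in range(3)]


def apply(t: Mat3, p: Point) -> Point:
    return (t[0][0] * p[0] + t[0][1] * p[1] + t[0][2],
            t[1][0] * p[0] + t[1][1] * p[1] + t[1][2])


def fit_rigid(src: List[Point], dst: List[Point]) -> Mat3:
    """Least-squares rotation + translation mapping src onto dst (2D Kabsch)."""
    n = len(src)
    sx, sy = sum(p[0] for p in src) / n, sum(p[1] for p in src) / n
    dx, dy = sum(p[0] for p in dst) / n, sum(p[1] for p in dst) / n
    dot = cross = 0.0
    for (ax, ay), (bx, by) in zip(src, dst):
        ax, ay, bx, by = ax - sx, ay - sy, bx - dx, by - dy
        dot += ax * bx + ay * by      # cosine term of the objective
        cross += ax * by - ay * bx    # sine term of the objective
    theta = math.atan2(cross, dot)
    c, s = math.cos(theta), math.sin(theta)
    # Translation maps the rotated source centroid onto the target centroid.
    return se2(dx - (c * sx - s * sy), dy - (s * sx + c * sy), theta)


# Hypothetical keypoints in the CAV frame, their true ego-frame positions,
# and their positions after the coarse transform built from a noisy pose.
keypoints = [(5.0, 1.0), (12.0, -3.0), (20.0, 4.0), (8.0, 9.0)]
true_tf = se2(10.0, 2.0, 0.30)
coarse_tf = se2(10.5, 1.6, 0.33)
ego_view = [apply(true_tf, p) for p in keypoints]
coarse = [apply(coarse_tf, p) for p in keypoints]

fine_tf = fit_rigid(coarse, ego_view)   # fine-grained correction
final_tf = matmul(fine_tf, coarse_tf)   # composed "second transformation"
print(max(math.dist(apply(final_tf, p), q) for p, q in zip(keypoints, ego_view)))
```

With exact matches the composed transform recovers the true pose; with noisy or partly wrong matches, this closed-form fit is usually wrapped in a robust estimator such as RANSAC.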

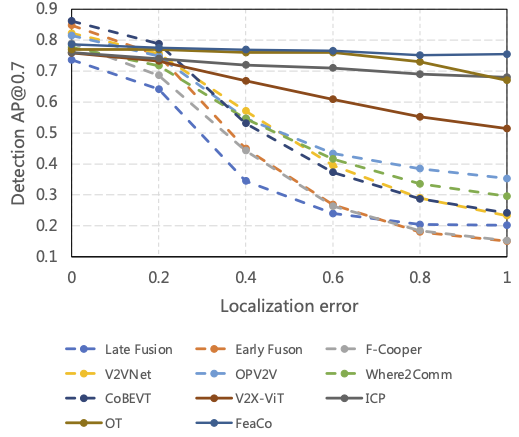

Experimental Results

3D detection results on the OPV2V dataset under various levels of localization error. Methods without robust designs are shown with dashed lines, and methods with robust designs are shown with solid lines. The proposed method outperforms the other methods, with or without robust designs.

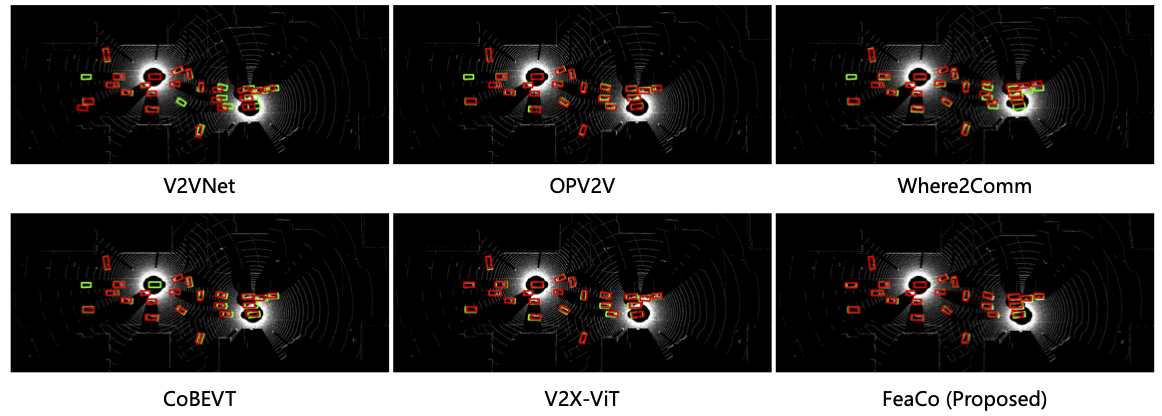

3D detection results in Bird's Eye View (BEV) format on the two subsets of the OPV2V dataset. Green and red boxes represent the ground truth and the predictions, respectively; the more closely the boxes coincide, the better the method performs. To assess the robustness of FeaCo in high-noise environments, the standard deviations of both the localization error and the heading error are set to 1.0.
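Pose noise of this kind is commonly simulated by adding zero-mean Gaussian noise to each CAV's position and heading. The Python sketch below assumes that convention, applying `sigma_pos` to x and y (taken as meters) and `sigma_yaw` to the heading (taken as degrees); the exact noise model and units used in the paper's experiments, as well as the function and parameter names, are assumptions here.

```python
import random
from typing import Tuple

Pose = Tuple[float, float, float]  # (x [m], y [m], yaw [deg]); units are an assumption


def add_pose_noise(pose: Pose, sigma_pos: float, sigma_yaw: float,
                   rng: random.Random) -> Pose:
    """Perturb a pose with independent zero-mean Gaussian noise per component."""
    x, y, yaw = pose
    return (x + rng.gauss(0.0, sigma_pos),
            y + rng.gauss(0.0, sigma_pos),
            yaw + rng.gauss(0.0, sigma_yaw))


# High-noise setting: both deviations set to 1.0.
rng = random.Random(42)  # fixed seed so an evaluation run is reproducible
clean = (35.0, -4.0, 90.0)
print(add_pose_noise(clean, sigma_pos=1.0, sigma_yaw=1.0, rng=rng))
```

Passing an explicit `random.Random` instance keeps the noise reproducible across methods, so every method in a comparison can be evaluated on identical perturbed poses.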

Our PRM efficiently rectifies the pose error for a precise fusion process, while our multi-scale CAM maintains detection performance for vehicles at various distances. FeaCo effectively detects vehicles in complex scenes with high pose noise, especially under large localization errors.

BibTeX:

@inproceedings{gu2023feaco,
  title={FeaCo: Reaching Robust Feature-Level Consensus in Noisy Pose Conditions},
  author={Gu, Jiaming and Zhang, Jingyu and Zhang, Muyang and Meng, Weiliang and Xu, Shibiao and Zhang, Jiguang and Zhang, Xiaopeng},
  booktitle={Proceedings of the 31st ACM International Conference on Multimedia},
  pages={3628--3636},
  year={2023}
}